the Creative Commons Attribution 4.0 License.

Distributed environments for ocean forecasting: the role of cloud computing

Stefania Ciliberti

Gianpaolo Coro

Cloud computing offers an opportunity to innovate traditional methods for provisioning the scalable and measurable computing resources needed by operational forecasting systems. It offers solutions for a more flexible and adaptable computing architecture, for developing and running models, and for managing and disseminating data to ultimately deploy services and applications. This review discusses the key characteristics of cloud computing: on-demand self-service, network access, resource pooling, elasticity, and measured service. Additionally, it provides an overview of existing service models and deployment models (e.g., private cloud, public cloud, community cloud, and hybrid cloud). A series of examples from the weather and ocean community are also briefly outlined, demonstrating how specific tasks can be mapped onto specific cloud patterns and which methods need to be implemented depending on the adopted service model.

Cloud computing presents an opportunity to rethink traditional approaches used in operational oceanography (Vance et al., 2016), since it can enable a more flexible and adaptable computing architecture for observations and predictions, offering new ways for scientists to observe and predict the state of the ocean and, consequently, to build innovative downstream services for end users and policy makers. Operational ocean forecasting systems (OOFSs) are sustained by a solid backbone composed of satellite and marine observation networks for Earth observations (i.e., data) and state-of-the-art numerical models (i.e., tools) that deliver products according to agreed standards (i.e., ocean predictions, indicators, etc.): the workflow is well represented by the ocean value chain, as described in Bahurel et al. (2010) and Alvarez Fanjul et al. (2022). OOFSs make heavy use of high-performance computing (HPC) to process data and run tools, whose results are shared and validated according to agreed data standards and methodologies; this can result in a remarkable computational cost that is not always affordable for research institutes and organizations. Additionally, when building services, it is also important to guarantee low latency, cost efficiency, and scalability, together with reliability and efficiency. In such a framework, cloud computing can represent an opportunity for expanding the capabilities of forecasting centers in managing, producing, processing, and sharing ocean data. It implies adopting, evolving, and sustaining standards and best practices to enhance management of the ocean value chain, to optimize the OOFS processes, and to allow rationalization of requirements and specifications to properly account for operating a forecasting system (Pearlman et al., 2019).

Cloud technology has dramatically evolved in the last decades: the private sector has extensively used cloud computing for enabling scalability and security, leveraging it for artificial intelligence (AI) and machine learning (ML) frameworks, Internet of Things (IoT) integration, and HPC to optimize and innovate operations. It also plays a crucial role in enhancing data interoperability and supporting the FAIR (findable, accessible, interoperable, and reusable; Wilkinson et al., 2016) principles, through standardization of formats, APIs, and access protocols, ensuring that datasets can be easily shared, accessed, and reused by researchers globally.

Considering OOFSs, the computational and programming models offered by cloud computing can largely support real-time data processing, scalable model runs, data sharing, and elastic operations, facilitating the integration of AI/ML techniques (Heimbach et al., 2025, in this report) and the development of applications for the blue economy and society (Veitch et al., 2025, in this report) in operational frameworks. In more detail, cloud computing can provide a powerful and collaborative platform for the development and running of operational models, for management and dissemination of data, for building and deploying services to downstream business and applications, and finally for analyses and visualization of oceanographic products, enabling researchers to tackle larger and more complex problems without the burden of building and maintaining computing and storage infrastructures. However, challenges such as data transfer latency, security, potential vendor lock-in, and the high costs of running complex modeling systems must be addressed.

This paper explores today's capabilities in cloud computing technology with an outlook on the benefits and challenges in adopting this paradigm in OOFSs. The remainder of this paper is organized as follows: Sect. 2 presents cloud computing foundational key concepts, highlighting some existing initiatives from the private sector; Sect. 3 discusses opportunities and challenges for ocean prediction in adopting cloud technologies, presenting existing international initiatives worldwide as examples; and Sect. 4 concludes this paper.

2.1 A brief history of cloud computing

Cloud computing is a specialized form of distributed computing that introduces utilization models for remotely provisioning scalable and measured computing resources (e.g., networks, servers, storage, applications, and services) (Mahmood et al., 2013), offering organizations different benefits for their business services and applications: scalability, cost savings, flexibility and agility, reliability and availability, collaboration and accessibility, innovation and experimentation, and sustainability.

The term “cloud computing” originated as a metaphor for the Internet, which is, in essence, a network of networks providing remote access to a set of decentralized IT resources. In the early 1960s, John McCarthy introduced the concept of computing as a utility:

If computers of the kind I have advocated become the computers of the future, then computing may someday be organized as a public utility just as the telephone system is a public utility. … The computer utility could become the basis of a new and important industry.

This idea opened the way to offering services over the Internet so that users could benefit from them in their applications. In the same period, Joseph Carl Robnett Licklider envisioned a world where interconnected systems of computers could communicate and interoperate: a milestone on the path to modern cloud computing. In the late 1990s, Ramnath Chellappa introduced for the first time the term “cloud computing” as a new computing paradigm (Chellappa, 1997), “where the boundaries of computing will be determined by economic rationale rather than technical limits alone”, dealing with concepts such as expandable and allocatable resources that can ensure cost efficiency, scalability, and business value. In the same period, Compaq Computer Corporation adopted the concept of the “cloud” in its business plan as a term for evolving the technological capacity of the company itself in offering new scalable and expandable services to customers over the Internet. The last 2 decades have been characterized by a rapid expansion of cloud computing: while the general public has been leveraging forms of Internet-based computer utilities since the mid-1990s in the form of search engines, e-mail services, social media platforms, etc., it was not until 2006 that the term “cloud computing” entered widespread use, when Amazon launched its Simple Storage Service (Amazon S3) followed by the Elastic Compute Cloud (Amazon EC2) service, enabling organizations to lease computing capacity and storage to run their business applications. In 2008, Google launched the Google App Engine, a cloud computing platform used as a service for developing and hosting web applications; then, in 2010 Microsoft launched Azure as a cloud computing platform and service provider that provides scalable, on-demand resources to customers to build applications globally; in 2012, Google launched the Google Compute Engine, which enables users to launch virtual machines (VMs) on demand.

To understand the framework on which cloud computing is built, it is fundamental to refer to the standards and best practices provided by the US National Institute of Standards and Technology (NIST) (Mell and Grance, 2011):

cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources that can be rapidly provisioned and released with minimal management effort or service provider interaction.

NIST further elaborates on cloud computing by providing a reference architecture based on five essential characteristics, three service models, and four deployment models.

2.2 An outlook to NIST definitions

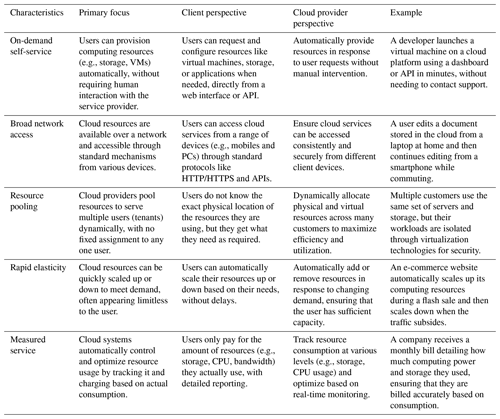

Cloud computing essential characteristics defined by NIST can be considered reference guidelines for both providers and clients to ensure scalable, cost-effective, and accessible resources to fit specific needs. Table 1 shows a summary of the essential characteristics' definitions as provided in Mell and Grance (2011), offering the client and provider's perspectives with some examples that show how cloud solutions ensure scalability, flexibility, and efficiency.

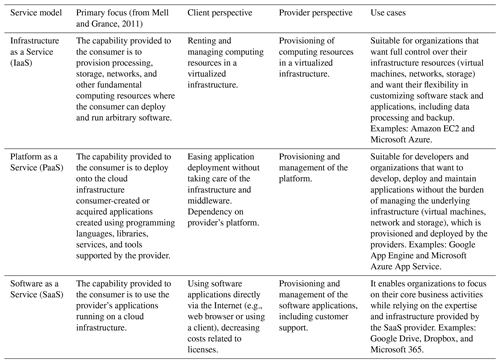

NIST specifies three possible cloud service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). They define the foundational characteristics of cloud services that clients need to ensure adequate levels of management, flexibility, and control. Table 2 presents the service models' definitions as provided in Mell and Grance (2011), discussing examples where they are used.

Besides the NIST definitions, another service model, similar to PaaS, is the serverless model (or Function as a Service – FaaS): it abstracts infrastructure concerns away from applications, so that developers implement application functionality as invocable functions/services whilst providers automatically provision, deploy, and scale these services based on a range of criteria, including efficiency, cost, and load balancing. Examples of serverless/FaaS services are AWS Lambda (https://aws.amazon.com/lambda, last access: 29 April 2025) and Fargate (https://aws.amazon.com/fargate, last access: 29 April 2025), Microsoft Azure Functions (https://azure.microsoft.com/en-us/products/functions, last access: 29 April 2025), Google Cloud Functions (https://cloud.google.com/functions, last access: 29 April 2025), and Scaleway Serverless Functions (https://www.scaleway.com/en/serverless-functions, last access: 29 April 2025).
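As an illustration of the serverless model, the following minimal Python sketch shows application logic written as a single invocable function. The `handler(event, context)` signature follows the convention used by the AWS Lambda Python runtime; the payload key `"sst"` and the response layout are hypothetical choices made only for this example, and a real deployment would depend on the provider and on how the function is triggered.

```python
import json


def handler(event, context):
    """Entry point called by the FaaS runtime once per invocation.

    `event` is assumed (hypothetically) to carry a list of sea surface
    temperatures in degrees Celsius under the key "sst".
    `context` holds runtime metadata and is not used in this sketch.
    """
    values = event.get("sst", [])
    if not values:
        # Nothing to process: report a client error instead of failing.
        return {"statusCode": 400, "body": json.dumps({"error": "no data"})}
    mean = sum(values) / len(values)
    return {"statusCode": 200, "body": json.dumps({"mean_sst": round(mean, 2)})}


if __name__ == "__main__":
    # Local invocation; on a FaaS platform the provider calls handler().
    print(handler({"sst": [18.2, 18.6, 19.0]}, None))
```

The function holds no state between calls, which is what allows a provider to start, replicate, and stop instances of it on demand.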

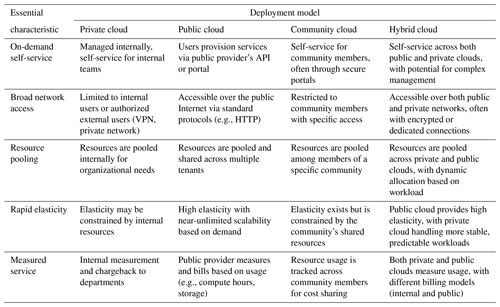

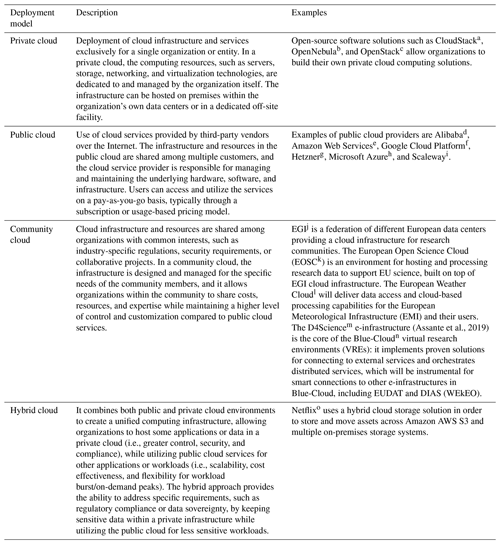

Cloud computing deployment models can be based on different approaches, offering organizations options for workload placement, application development, and resource allocation to optimize their cloud strategy based on their needs, cost considerations, performance requirements, compliance regulations, and desired level of control. The four cloud computing deployment models identified by NIST are reported in Table 3 with a description and some examples.

Table 3. NIST cloud computing deployment models.

a https://cloudstack.apache.org (last access: 29 April 2025). b https://opennebula.io (last access: 29 April 2025). c https://www.openstack.org (last access: 29 April 2025). d https://www.alibabacloud.com (last access: 29 April 2025). e https://aws.amazon.com (last access: 29 April 2025). f https://cloud.google.com (last access: 29 April 2025). g https://www.hetzner.com/cloud (last access: 29 April 2025). h https://azure.microsoft.com (last access: 29 April 2025). i https://www.scaleway.com/en (last access: 29 April 2025). j https://www.egi.eu (last access: 29 April 2025). k https://eosc.eu (last access: 29 April 2025). l https://www.europeanweather.cloud (last access: 29 April 2025). m https://www.d4science.org/ (last access: 29 April 2025). n https://www.blue-cloud.org/e-infrastructures/d4science (last access: 29 April 2025). o https://aws.amazon.com/solutions/case-studies/netflix-storage-reinvent22 (last access: 29 April 2025).

Besides the cloud deployment models identified by NIST, there are a few other approaches worth mentioning that provide further capabilities to organizations that decide to embrace cloud technology.

Multi-cloud computing refers to the strategy of using multiple cloud service providers: organizations leverage the services of two or more public or private cloud providers, or a combination of public and private cloud providers, combining their offerings to build and manage their applications and infrastructure. This approach allows businesses to take advantage of the strengths and capabilities of different cloud providers, such as cost effectiveness, performance, geographic coverage, or specialized services. It also offers increased flexibility and redundancy, and it mitigates the risk of vendor lock-in (Hong et al., 2019). Multi-cloud solutions, which can be based on open-source technologies such as Kubernetes, can ease the migration of applications and improve portability since they support containerization and microservices. Major challenges include the complexity in the management of the infrastructure, issues with integration and interoperability, and security.

The edge-computing paradigm enables data analysis, storage, and the offloading of computations near the edge devices (such as Internet of Things – IoT – devices, sensors, and mobile devices) to improve response time and save bandwidth (Pushpa and Kalyani, 2020). This approach aims at minimizing the data volume to process in the cloud, reducing network costs and bandwidth utilization, and increasing reliability and scalability. Major challenges include the complexity in the management of the edge devices, security potentially affected by devices' vulnerability, and synchronization of communications between edge devices and cloud infrastructure.
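The data-reduction idea behind edge computing can be sketched in a few lines of Python. In this hypothetical example, an edge device groups raw sensor readings into fixed-size windows and forwards only one compact summary per window to the cloud; the function names, the window size, and the choice of summary statistics are assumptions made for illustration and are not taken from any specific system.

```python
from statistics import mean


def summarize_window(readings):
    """Reduce a window of raw readings to a small summary record."""
    return {
        "n": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


def edge_batches(stream, window):
    """Yield one summary per `window` raw readings from `stream`.

    Only the summaries would be sent to the cloud, so the transmitted
    volume shrinks roughly by a factor of `window`.
    """
    buffer = []
    for value in stream:
        buffer.append(value)
        if len(buffer) == window:
            yield summarize_window(buffer)
            buffer = []
    if buffer:
        # Flush the last, possibly shorter, window.
        yield summarize_window(buffer)


if __name__ == "__main__":
    raw = [1.0, 2.0, 3.0, 4.0, 5.0]
    for summary in edge_batches(raw, window=2):
        print(summary)
```

Whether summaries are sufficient depends on the application; a quality-control workflow may still need the raw series to be uploaded later.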

Distributed cloud-edge computing, one of the main innovation streams for cloud computing, combines elements of cloud computing with edge computing, extending the capabilities of the traditional centralized cloud infrastructure by distributing cloud services closer to the edge of the network, where data are generated and consumed, rather than relying solely on centralized data centers. By moving cloud services closer to where data are generated, latency (defined as the delay in network communication) is reduced, so that real-time or time-sensitive applications (e.g., collection of observations from automated sensors and systems for guaranteeing efficiency in operations; early warning systems for disaster management and safety) can benefit from faster response times and improved performance. This is especially crucial for applications requiring immediate data processing and low latency. Recently, public cloud providers started to offer pre-configured appliances (e.g., AWS Outposts, Azure Stack) that bring the power of the public cloud to the private and edge cloud and have defined collaborations with telcos (e.g., AWS and Vodafone, Google and AT&T) to create 5G edge services. Furthermore, the main open-source cloud management platforms provide extensions (OpenNebula ONEedge, OpenStack StarlingX, Kubernetes KubeEdge) for enhancing private clouds with capabilities for automated provisioning of computing, storage, and networking resources and/or for orchestrating virtualized and containerized applications on the edge. Major challenges include ensuring data security across the distributed locations, safe communication between cloud and edge, resource management, and network reliability.

Based on NIST's definitions as discussed before, Table 4 summarizes how the five essential characteristics apply across the four deployment models (public, private, hybrid, and community cloud) to support the selection of the right cloud model with respect to cost efficiency, performance, security, and management.

Cloud-native applications – which are built, run, and maintained using tools, techniques, and technologies for cloud computing – provide abstraction from underlying infrastructure and enhanced scalability, flexibility, and reliability, which are strongest in public and hybrid cloud models. Cloud-native application development is driven by new software models, such as microservices and serverless, and is made possible through technologies such as containers (e.g., Docker, https://www.docker.com/, last access: 29 April 2025) and container orchestration tools (e.g., Kubernetes), which are becoming the de facto leading standards for packaging, deployment, scaling, and management of enterprise and business applications on cloud computing infrastructures.
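To make the notion of automated scaling more concrete, the sketch below implements a proportional scaling rule in Python. It is modeled on the rule documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)); the function name, the replica bounds, and the example metric values are hypothetical and serve only as an illustration.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Return the replica count that brings the metric back to target.

    The metric is any per-replica load indicator (for example average
    CPU utilization in percent). The bounds are illustrative defaults.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the allowed range so scaling never runs away.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Two replicas at 90 % load with a 60 % target: scale out.
    print(desired_replicas(2, 90, 60))
    # Four replicas at 20 % load with a 60 % target: scale in.
    print(desired_replicas(4, 20, 60))
```

A production autoscaler adds tolerances and stabilization windows around this rule to avoid oscillation; those refinements are omitted here.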

Following the rise of containerization in enterprise environments, the adoption of container technologies has gained momentum in technical and scientific computing, including high-performance computing (HPC). Containers can address many HPC problems (Mancini and Aloisio, 2015); however, security and performance overhead represent some current limits in using containerization in HPC environments (Chung et al., 2016; Abraham et al., 2020). Several container platforms have been created to address the needs of the HPC community, such as Shifter (Jacobsen and Canon, 2015), Singularity (Kurtzer et al., 2017) (now Apptainer), Charliecloud (Priedhorsky and Randles, 2017), and Sarus (Benedicic et al., 2019). Recently, Podman (https://podman.io/, last access: 29 April 2025) has been analyzed to investigate its suitability in the context of HPC (Gantikow et al., 2020), showing some promise in bringing a standard-based, multi-architecture enabled container engine to HPC.

Technological advancements in cloud computing and its foundational characteristics, services, and models can provide enormous advantages for operational oceanography across the ocean architectures.

Vance et al. (2019) explored uses of the cloud for managing and analyzing observational data and model workflows: for instance, they show how cloud platforms can support the collection and quality control of observations, reducing the risk that power outages, loss of network connectivity, or other issues related to weather conditions at sea compromise transmissions from sensors to the base station. Large-scale datasets related to forecast and observational oceanographic products can be stored in cloud-native storage (e.g., S3 Object Storage) and accessed from any location with public connectivity. This approach facilitates data-proximate computations (Ramamurthy, 2018), allowing analysis to be performed near the data source using remote resources rather than requiring extensive local downloads and infrastructure (Zhao et al., 2015).
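One reason object storage suits data-proximate analysis is that a large gridded dataset can be split into chunks stored as separate objects, so that a request for a sub-region touches only a few of them. The Python sketch below computes which chunk objects intersect a requested index range. The key layout (`prefix/i.j.k`), the chunk shape, and the function names are hypothetical and are not tied to any particular storage format or library.

```python
from itertools import product


def chunks_for_slice(start, stop, chunk_size):
    """Chunk indices along one axis that overlap the range [start, stop)."""
    if stop <= start:
        return range(0)
    return range(start // chunk_size, (stop - 1) // chunk_size + 1)


def object_keys(region, chunk_shape, prefix="sst"):
    """List the object keys needed to read `region` of a chunked array.

    `region` is a list of (start, stop) index pairs, one per axis, and
    `chunk_shape` gives the chunk length along each axis.
    """
    per_axis = [chunks_for_slice(a, b, c)
                for (a, b), c in zip(region, chunk_shape)]
    return [prefix + "/" + ".".join(str(i) for i in idx)
            for idx in product(*per_axis)]


if __name__ == "__main__":
    # One time step, rows 100-259 and columns 50-119 of a grid stored
    # in chunks of 1 x 100 x 100 cells.
    for key in object_keys([(0, 1), (100, 260), (50, 120)], (1, 100, 100)):
        print(key)
```

In this example only four objects need to be read, regardless of how large the full grid is.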

Nowadays, the Digital Twin of the Ocean (DTO) framework is revolutionizing ocean services, acting as a bridge between the current digitalization of processes and the future intelligence. DTO is empowering the use of advanced technologies, such as artificial intelligence (AI) and cloud computing, for industrializing and informatizing the marine sector while supporting operations from data pooling to data processing, with a final direct benefit for applications (Chen et al., 2023). It is then of paramount importance to understand how modern computing technologies can support scientific investigation, enhance ocean forecasting services, and contribute to the evolution of such systems.

To achieve this goal, analysis patterns theorized by Fowler (1997) and described for e-science by Butler and Merati (2016) can be applied, in a simplified way, to the ocean value chain (Alvarez Fanjul et al., 2022) explaining the added value of adopting cloud-based solutions to improve operational forecasting workflows.

The term “analysis pattern” focuses on organizational aspects of a system since they are crucial for requirement analysis. Geyer-Schulz and Hahsler (2001) designed a specific template for analysis patterns: starting from that and the examples proposed by Butler and Merati (2016) for e-science, we propose an initial analysis of cloud patterns (CPs) for the cloud-based OOFS processes, taking the ocean value chain components as a reference framework.

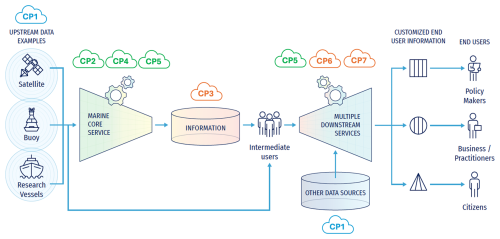

Figure 1. The ocean value chain and associated cloud patterns (adapted from Alvarez Fanjul et al., 2022).

The following are some initial identified cloud patterns, which are mapped in Fig. 1.

- CP1: cloud-based management of ocean data for OOFSs. This pattern is devoted to integrating the cloud-based approach into forecasting services, facilitating access to large volumes of diverse, current, and authoritative data. It addresses challenges related to locating and using large amounts of scientific data. It is particularly useful for data managers who need to provide upstream data to forecasters for running one or more models or for validating the numerical results. It can be implemented on the hybrid/public cloud, and the design can be based on PaaS or SaaS (data access as a service). It enables seamless integration of upstream data from multiple sources (including observations and forcing data used in model applications).

- CP2: cloud-based computing infrastructure for OOFSs. It explores cloud-based platforms and tools for running computationally intensive numerical models and procedures used for forecasting services. It benefits numerical modelers and forecasters that require high-performance computing (HPC) to run a model application that can include AI/ML pre-/post-processing. It can be implemented on a private cloud, adopting IaaS service models. It enhances the execution of the Marine Core Service by optimizing computing resources such as CPU/GPU, networking, and storage.

- CP3: cloud-based management of ocean data produced by OOFSs. Designed for storing and managing geospatial ocean data in the cloud, this pattern addresses the challenge of growing data volumes with limited budgets dedicated to data management. It is valuable for data managers that need to store forecast products, including model results in native format, for further analysis and processing. Data can be stored in dedicated file systems or databases and made accessible through APIs (including GIS-based ones). It can be implemented on a private cloud, using the PaaS service model. It ensures efficient storage and accessibility of data produced by the Marine Core Service, made available for dissemination to users.

- CP4: cloud-based computing infrastructure for OOFS disaster recovery in the cloud. It focuses on leveraging cloud computing in the ocean forecast production pipeline to enhance robustness and meet the growing demand for scalable computational resources. It can be used by forecasters that need OOFSs on demand under unexpected situations (e.g., working as backup in case the nominal unit is down). A private/hybrid cloud can be used, and the design can be based on PaaS or IaaS. This approach enhances the Marine Core Service by ensuring operational continuity and timely dissemination of forecast products.

- CP5: analysis of OOFS products in the cloud. It focuses on performing analysis and processing of ocean data in the cloud, facilitating multi-model intercomparisons and quality assessment, even for larger datasets and/or datasets from multiple sources. It is beneficial for product quality experts and data analysts in charge of quality control or for providing a private-cloud-based service for pre-qualification of ocean products. It can be implemented through a hybrid/private cloud, and the design can be based on SaaS. It supports the Marine Core Service quality assurance and downstream services through tailored user-oriented metrics or indicators for downstream applications.

- CP6: visualization of OOFS in the cloud. It is devoted to the integration of cloud-based visualization capabilities to process and publish ocean products via the (cloud) service. It also addresses the need for visualizing larger amounts of data. It can be useful for data engineers and forecasters that need to create user-friendly visualizations for end users and policy makers. It can be implemented using a private/public cloud, and the design can be based on SaaS. It supports downstream services by providing an interactive visualization service and tailored user-oriented visual bulletins for end users.

- CP7: product dissemination and outreach in the cloud. It is devoted to the use of cloud-based platforms and tools for dissemination of OOFS products to different audiences, both scientific and non-scientific. This is useful for communication experts that need to use a cloud-based repository for sharing insights and digital material produced using OOFS products. It uses hybrid/private cloud solutions, and the design can be based on SaaS. It enhances multiple downstream services by providing customized and accessible end-user information for policy-making, business, and society.
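The mapping between the cloud patterns listed above and their suggested deployment and service models can be captured in a small lookup structure. The Python sketch below encodes the options stated for CP1 to CP7 and provides a hypothetical helper, `patterns_for`, to query them; the short task labels are paraphrases, and the structure is an illustration rather than part of the original analysis.

```python
# Deployment and service models as stated for each cloud pattern (CP).
CLOUD_PATTERNS = {
    "CP1": {"task": "management of upstream ocean data",
            "deployment": {"hybrid", "public"}, "service": {"PaaS", "SaaS"}},
    "CP2": {"task": "computing infrastructure for model runs",
            "deployment": {"private"}, "service": {"IaaS"}},
    "CP3": {"task": "management of data produced by OOFSs",
            "deployment": {"private"}, "service": {"PaaS"}},
    "CP4": {"task": "disaster recovery",
            "deployment": {"private", "hybrid"}, "service": {"PaaS", "IaaS"}},
    "CP5": {"task": "analysis of OOFS products",
            "deployment": {"hybrid", "private"}, "service": {"SaaS"}},
    "CP6": {"task": "visualization",
            "deployment": {"private", "public"}, "service": {"SaaS"}},
    "CP7": {"task": "dissemination and outreach",
            "deployment": {"hybrid", "private"}, "service": {"SaaS"}},
}


def patterns_for(deployment=None, service=None):
    """Return the sorted names of patterns matching the given filters."""
    return sorted(
        name for name, p in CLOUD_PATTERNS.items()
        if (deployment is None or deployment in p["deployment"])
        and (service is None or service in p["service"])
    )


if __name__ == "__main__":
    print(patterns_for(service="IaaS"))
    print(patterns_for(deployment="public"))
```

Such a table makes it easy to see, for instance, which patterns a center restricted to a private cloud could still adopt.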

Most of the challenges generically introduced in Sect. 2 can still be pertinent when adopting cloud computing solutions for OOFSs.

- Data security. Processing oceanographic data might generate sensitive information that requires proper management. In addition, downstream services might require the use of data from governmental or research institutes that need to be preserved and possibly not shared.

- Costs. While cloud computing can reduce upfront infrastructure costs, it can become expensive for continuous, long-term use or for HPC tasks that require significant computational power.

- Latency and bandwidth limitations. Ingesting or accessing a large volume of ocean data on centralized cloud data centers might affect OOFSs' performance due to poor network connection.

- Dependence on cloud providers (vendor lock-in). Deployment of OOFSs on specific cloud providers might lead to vendor lock-in, complicating migration to another cloud provider due to proprietary technologies, APIs, or data formats.

- Regulatory and compliance issues. Cloud providers must comply with various regulatory frameworks, and using a public cloud for OOFS might complicate compliance with data protection laws or environmental regulations or even with licenses.

- Limited control over hardware. Cloud users do not have direct control over the underlying hardware, which may be a disadvantage when HPC resources need fine-tuned optimization to run OOFS.

- Impact on code refactoring. Adapting OOFS to a cloud environment may require significant code refactoring to optimize for distributed computing, cloud-native architectures, and specific provider APIs, potentially increasing development effort and complexity.
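The cost trade-off mentioned in the list above can be illustrated with a simple break-even calculation. The Python sketch below assumes a deliberately simplified linear model: an owned system has an upfront cost plus an hourly running cost, while a cloud resource has only an hourly rate. All names and figures are hypothetical placeholders, not real prices, and the model ignores factors such as hardware depreciation, staffing, data egress fees, and reserved-capacity discounts.

```python
def break_even_hours(upfront_cost, on_prem_hourly, cloud_hourly):
    """Hours of use beyond which owning is cheaper than renting.

    Solves upfront_cost + on_prem_hourly * h = cloud_hourly * h for h.
    Returns infinity if the cloud rate does not exceed the on-premises
    running cost, since renting then never becomes more expensive.
    """
    if cloud_hourly <= on_prem_hourly:
        return float("inf")
    return upfront_cost / (cloud_hourly - on_prem_hourly)


if __name__ == "__main__":
    # Placeholder figures in arbitrary currency units.
    hours = break_even_hours(upfront_cost=100_000,
                             on_prem_hourly=2,
                             cloud_hourly=12)
    print(f"Break-even after {hours:.0f} hours of use")
```

Under these placeholder values, a system running continuously would pass the break-even point in a little over a year, which is consistent with the observation that continuous, long-term workloads are where cloud costs tend to accumulate.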

In the following, some US and EU programs, initiatives, and projects are reported as examples of how cloud computing technologies and patterns have been used to provide services to the oceanographic and scientific community in general.

3.1 NOAA Open Data Dissemination and Big Data Program

NOAA's Open Data Dissemination (NODD, https://www.noaa.gov/nodd, last access: 29 April 2025) Program is designed to facilitate public use of key environmental datasets by providing copies of NOAA's information in the cloud, allowing users to do analyses of data and extract information without having to transfer and store these massive datasets themselves. NODD started out as the Big Data Project in April 2015 (and then later became the Big Data Program); NODD currently works with three IaaS providers (Amazon Web Services (AWS), Google Cloud Platform, and Microsoft Azure) to broaden access to NOAA's data resources. These partnerships are designed to not only facilitate full and open data access at no net cost to the taxpayer but also foster innovation by bringing together the tools necessary to make NOAA's data more readily accessible. There are over 220 NOAA datasets on the cloud service provider (CSP) platforms. The datasets are organized by the NOAA organization that generated the original dataset (https://www.noaa.gov/nodd/datasets, last access: 29 April 2025).

3.2 Copernicus Service and Data and Information Access Services

Copernicus (https://www.copernicus.eu, last access: 29 April 2025) is the Earth observation component of the EU Space Programme, looking at the Earth and its environment to benefit all European citizens. Copernicus generates on a yearly basis petabytes of data and information that draw from satellite Earth observation and in situ (non-space) data. The up-to-date information provided by the core services (atmosphere, https://atmosphere.copernicus.eu/, last access: 29 April 2025; climate change, https://climate.copernicus.eu/, last access: 29 April 2025; marine, https://marine.copernicus.eu/, last access: 29 April 2025; land, https://land.copernicus.eu/en, last access: 29 April 2025; security, https://www.copernicus.eu/en/copernicus-services/security, last access: 29 April 2025; and emergency, https://emergency.copernicus.eu/, last access: 29 April 2025) is free and openly accessible to users. As the data archives grow, it becomes more convenient and efficient not to download the data anymore but to analyze them where they are originally stored.

To facilitate and standardize access to data, the European Commission has funded the deployment of five cloud-based platforms (CREODIAS, https://creodias.eu/, last access: 29 April 2025; Mundi, https://mundiwebservices.com/, last access: 29 April 2025; Onda, https://www.onda-dias.eu/cms/, last access: 29 April 2025; Sobloo, https://engage.certo-project.org/sobloo-overview/, last access: 29 April 2025; and WEkEO, https://www.wekeo.eu/, last access: 29 April 2025), known as Data and Information Access Services (DIAS; https://www.copernicus.eu/en/access-data/dias, last access: 29 April 2025) that provide centralized access to Copernicus data and information, as well as to processing tools. The DIAS platforms provide users with a large choice of options to benefit from the data generated by Copernicus: to search, visualize, and further process the Copernicus data and information through a fully maintained software environment while still having the possibility to download the data to their own computing infrastructure. All DIAS platforms provide access to Copernicus Sentinel data, as well as to the information products from the six operational services of Copernicus, together with cloud-based tools (open source and/or on a pay-per-use basis). Thanks to a single access point for all the Copernicus data and information, DIAS platforms allow the users to develop and host their own applications in the cloud, while removing the need to download bulky files from several access points and process them locally.

3.3 Blue-Cloud

The European Open Science Cloud (EOSC) provides a virtual environment with open and seamless access to services for storage, management, analysis, and reuse of research data, across borders and disciplines. Blue-Cloud aims at developing a marine thematic EOSC to explore and demonstrate the potential of cloud-based open science for better understanding and managing the many aspects of ocean sustainability (https://blue-cloud.org/news/blue-clouds-position-paper-eosc, last access: 29 April 2025). The Blue-Cloud platform, federating European blue data management infrastructures (SeaDataNet, https://www.seadatanet.org/, last access: 29 April 2025; EurOBIS, https://www.eurobis.org/, last access: 29 April 2025; Euro-Argo ERIC, https://www.euro-argo.eu/, last access: 29 April 2025; Argo GDAC (Wong et al., 2020); EMODnet, https://emodnet.ec.europa.eu/en, last access: 29 April 2025; ELIXIR-ENA, https://elixir-europe.org/services/biodiversity, last access: 29 April 2025; Euro-BioImaging, https://www.eurobioimaging.eu/, last access: 29 April 2025; Copernicus Marine; Copernicus Climate Change; and ICOS-Marine, https://www.icos-cp.eu/observations/ocean/otc, last access: 29 April 2025) and horizontal e-infrastructures (EUDAT, https://www.eudat.eu/, last access: 29 April 2025; DIAS; D4Science), provides FAIR access to multidisciplinary data, analytical tools, and computing and storage facilities that support research. Blue-Cloud provides services through pilot demonstrators for oceans, seas, and freshwater bodies for ecosystems research, conservation, forecasting, and innovation in the blue economy, and it accelerates cross-discipline science, making innovative use of seamless access to multidisciplinary data, algorithms, and computing resources.

Cloud computing has been demonstrated to be a key driver in the digital evolution of the private sector, offering a baseline for expanding and scaling applications and services by enhancing scalability, cost efficiency, and data processing. Service models offer different layers for pushing technological evolution, in which infrastructure, platform, and software are delivered as services that can be deployed in different cloud models, depending on whether users need to keep resources public, private, or hybrid. By leveraging on-demand computing power, big data analytics, and global data accessibility and sharing, cloud computing improves business efficiency, scientific research, and innovation, benefiting society and business. Taking these concepts for granted, cloud computing can be seen as an opportunity for operational oceanography to enhance ocean prediction and monitoring by exploiting its collaborative framework to support the blue economy, sustainable ocean management, and climate change mitigation actions. The simplified pattern analysis has shown how OOFS architecture components can be implemented in a cloud environment without the burden of maintaining complex infrastructure: common tasks such as processing and analyzing large datasets can be optimized in cloud-native storage, using software that can be integrated with AI/ML techniques for anomaly detection or with specific APIs for data search and retrieval. Cloud-based visualization and data delivery can ensure security, especially for critical information that can impact decision-making, driving better-informed policies and responses in marine and coastal management.
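As a concrete illustration of the anomaly detection task mentioned above, the sketch below flags outliers in a short time series. It is a deliberately simple stand-in: a plain z-score test replaces the AI/ML techniques referred to in the text, and the `flag_anomalies` function, its `threshold` parameter, and the sea surface temperature values are assumptions made for the example rather than part of any operational system.

```python
from statistics import mean, stdev


def flag_anomalies(series, threshold=2.0):
    """Return the indices whose value deviates from the series mean by more
    than `threshold` sample standard deviations (a plain z-score test)."""
    mu = mean(series)
    sigma = stdev(series)
    if sigma == 0:
        return []  # a constant series has no outliers under this test
    return [i for i, x in enumerate(series) if abs(x - mu) / sigma > threshold]


# Invented daily sea surface temperatures (degrees Celsius) with one spike.
sst = [14.1, 14.2, 14.0, 14.3, 14.1, 18.9, 14.2, 14.0, 14.1, 14.2]

print(flag_anomalies(sst))
```

In a cloud deployment, a function of this kind would typically run next to the stored data and be applied per chunk or per grid point, so that only the flagged indices need to be returned to the user.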

Despite these advantages, several challenges remain, some of them partially solved by existing deployment models (hybrid cloud, for instance). Interoperability, which is one of the pillars of cloud-based environments, requires the definition of data standards and the adoption of best practices. Security in data access and sharing, as well as the costs associated with running forecasting systems, can raise concerns about vendor lock-in and long-term sustainability.

Promoting a collaborative framework among existing and new centers could be seen as one promising approach for fostering innovation, collaboration, and more efficient ocean prediction and monitoring: by leveraging shared cloud-based resources, forecasting centers can combine their expertise and share data and tools, supporting the creation of a “digital twin” of the ocean to use for a wide range of applications for managing and protecting our ocean.

No datasets were used in this article.

SC contributed to the conceptualization, writing, and validation. GP contributed to the writing and validation.

At least one of the (co-)authors is a member of the editorial board of State of the Planet. The peer-review process was guided by an independent editor, and the authors also have no other competing interests to declare.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

The authors are grateful to Marco Mancini for sharing his expert view on cloud computing technology and to Enrique Alvarez Fanjul for analyzing Sect. 3 and sharing his expert view on the impact of using cloud computing in support of operational oceanography. The authors also extend their gratitude to the reviewers, Alvaro Lorenzo Lopez and Miguel Charcos-Llorens, and to the topic editor, Jay Pearlman, for their valuable and insightful comments that significantly improved this paper.

This paper was edited by Jay Pearlman and reviewed by Miguel Charcos-Llorens and Alvaro Lorenzo Lopez.

Abraham, S., Paul, A. K., Khan, R. I. S., and Butt, A. R.: On the Use of Containers in High Performance Computing Environments, 2020 IEEE 13th International Conference on Cloud Computing (CLOUD), 19–23 October 2020, Beijing, China, https://doi.org/10.1109/CLOUD49709.2020.00048, 2020.

Alvarez Fanjul, E., Ciliberti, S., and Bahurel, P.: Implementing Operational Ocean Monitoring and Forecasting Systems, IOC-UNESCO, GOOS-275, https://doi.org/10.48670/ETOOFS, 2022.

Assante, M., Candela, L., Castelli, D., Cirillo, R., Coro, G., Frosini, L., Lelii, L., Mangiacrapa, F., Pagano, P., Panichi, G., and Sinibaldi, F.: Enacting open science by D4Science, Future Gener. Comp. Sy., 101, 555–563, https://doi.org/10.1016/j.future.2019.05.063, 2019.

Bahurel, P., Adragna, F., Bell, M., Jacq, F., Johannessen, J., Le Traon, P.-Y., Pinardi, N., and She, J.: Ocean Monitoring and Forecasting Core Services, the European MyOcean Example, Proceedings of OceanObs'09: Sustained Ocean Observations and Information for Society, 21–25 September 2009, Venice, Italy, https://doi.org/10.5270/OceanObs09.pp.02, 2010.

Benedicic, L., Cruz, F. A., Madonna, A., and Mariotti, K.: Sarus: Highly Scalable Docker Containers for HPC Systems, in: High Performance Computing, ISC High Performance 2019, Lecture Notes in Computer Science, edited by: Weiland, M., Juckeland, G., Alam, S., and Jagode, H., Springer, Cham, vol. 11887, https://doi.org/10.1007/978-3-030-34356-9_5, 2019.

Butler, K. and Merati, N.: Chapter 2 – Analysis patterns for cloud centric atmospheric and ocean research, in: Cloud Computing in Ocean and Atmospheric Sciences, Academic Press, Elsevier, 15–34, https://doi.org/10.1016/B978-0-12-803192-6.00002-5, 2016.

Chellappa, R.: Intermediaries in cloud-computing: A new computing paradigm, INFORMS Dallas 1997, Cluster: Electronic Commerce, Dallas, Texas, 1997.

Chen, G., Yang, J., Huang, B., Ma, C., Tian, F., Ge, L., Xia, L., and Li, J.: Toward digital twin of the ocean: from digitalization to cloning, Intell. Mar. Technol. Syst., 1, 3, https://doi.org/10.1007/s44295-023-00003-2, 2023.

Chung, M. T., Quang-Hung, N., Nguyen, M.-T., and Thoai, N.: Using Docker in high performance computing applications, 2016 IEEE Sixth International Conference on Communications and Electronics (ICCE), 27–29 July 2016, Ha-Long, Vietnam, https://doi.org/10.1109/CCE.2016.7562612, 2016.

Fowler, M.: Analysis Patterns: Reusable Object Models, Object Technology Series, Addison–Wesley Publishing Company, Reading, ISBN 978-0-201-89542-1, 1997.

Gantikow, H., Walter, S., and Reich, C.: Rootless Containers with Podman for HPC, in: High Performance Computing, ISC High Performance 2020, Lecture Notes in Computer Science, edited by: Jagode, H., Anzt, H., Juckeland, G., and Ltaief, H., Springer, Cham, 12321, https://doi.org/10.1007/978-3-030-59851-8_23, 2020.

Geyer-Schulz, A. and Hahsler, M.: Software Engineering with Analysis Patterns, Working Papers on Information Systems, Information Business and Operations, 01/2001, Institut für Informationsverarbeitung und Informationswirtschaft, WU Vienna University of Economics and Business, Vienna, https://doi.org/10.57938/b8d9c0a2-92e7-489f-bec9-b08485ed7c42, 2001.

Heimbach, P., O'Donncha, F., Smith, T., Garcia-Valdecasas, J. M., Arnaud, A., and Wan, L.: Crafting the Future: Machine Learning for Ocean Forecasting, in: Ocean prediction: present status and state of the art (OPSR), edited by: Álvarez Fanjul, E., Ciliberti, S. A., Pearlman, J., Wilmer-Becker, K., and Behera, S., Copernicus Publications, State Planet, 5-opsr, 22, https://doi.org/10.5194/sp-5-opsr-22-2025, 2025.

Hong, J., Dreibholz, T., Schenkel, J. A., and Hu, J. A.: An Overview of Multi-cloud Computing, in: Web, Artificial Intelligence and Network Applications, edited by: Barolli, L., Takizawa, M., Xhafa, F., and Enokido, T., WAINA 2019, Advances in Intelligent Systems and Computing, vol. 927, Springer, Cham, https://doi.org/10.1007/978-3-030-15035-8_103, 2019.

Jacobsen, D. M. and Canon, R. S.: Contain This, Unleashing Docker for HPC, Cray User Group 2015, https://www.nersc.gov/assets/Uploads/cug2015udi.pdf (last access: 29 July 2024), 2015.

Kurtzer, G. M., Sochat, V., and Bauer, M. W.: Singularity: Scientific containers for mobility of compute, PLOS ONE, 12, 1–20, https://doi.org/10.1371/journal.pone.0177459, 2017.

Mahmood, Z., Puttini, R., and Erl, T.: Cloud Computing: Concepts, Technology & Architecture, 1st edn., Prentice Hall Press, USA, ISBN 9780133387520, 2013.

Mancini, M. and Aloisio, G.: How advanced cloud technologies can impact and change HPC environments for simulation, 2015 International Conference on High Performance Computing & Simulation (HPCS), 20–24 July 2015, Amsterdam, the Netherlands, 667–668, https://doi.org/10.1109/HPCSim.2015.7237116, 2015.

Mell, P. and Grance, T.: The NIST Definition of Cloud Computing, Computing Security Resource Center, NIST Special Publication 800-145, National Institute of Standards and Technology, https://doi.org/10.6028/NIST.SP.800-145, 2011.

Pearlman, J., Bushnell, M., Coppola, L., Karstensen, J., Buttigieg, P. L., Pearlman, F., Simpson, P., Barbier, M., Muller-Karger, F. E., Munoz-Mas, C., Pissierssens, P., Chandler, C., Hermes, J., Heslop, E., Jenkyns, R., Achterberg, E. P., Bensi, M., Bittig, H. C., Blandin, J., Bosch, J., Bourles, B., Bozzano, R., Buck, J. J. H., Burger, E. F., Cano, D., Cardin, V., Llorens, M. C., Cianca, A., Chen, H., Cusack, C., Delory, E., Garello, R., Giovanetti, G., Harscoat, V., Hartman, S., Heitsenrether, R., Jirka, S., Lara-Lopez, A., Lantéri, N., Leadbetter, A., Manzella, G., Maso, J., McCurdy, A., Moussat, E., Ntoumas, M., Pensieri, S., Petihakis, G., Pinardi, N., Pouliquen, S., Przeslawski, R., Roden, N. P., Silke, J., Tamburri, M. N., Tang, H., Tanhua, T., Telszewski, M., Testor, P., Thomas, J., Waldmann, C., and Whoriskey, F.: Evolving and Sustaining Ocean Best Practices and Standards for the Next Decade, Front. Mar. Sci., 6, 277, https://doi.org/10.3389/fmars.2019.00277, 2019.

Priedhorsky, R. and Randles, T.: Charliecloud: Unprivileged Containers for User-Defined Software Stacks in HPC, SC17: International Conference for High Performance Computing, Networking, Storage and Analysis, 12–17 November 2017, Denver, CO, USA, 1–10, https://doi.org/10.1145/3126908.3126925, 2017.

Pushpa, J. and Kalyani, S. A.: Chapter Three – Using fog computing/edge computing to leverage Digital Twin, Advances in Computers, 117, 51–77, https://doi.org/10.1016/bs.adcom.2019.09.003, 2020.

Ramamurthy, M. K.: Data-Proximate Computing, Analytics, and Visualization Using Cloud-Hosted Workflows and Data Services, American Meteorological Society, 98th Annual Meeting, 6–11 January 2018, Austin, USA, https://ams.confex.com/ams/98Annual/webprogram/Paper337167.html (last access: 29 July 2024), 2018.

Vance, T. C., Merati, N., Yang, C., and Yuan, M.: Cloud Computing in Ocean and Atmospheric Sciences, Academic Press, https://doi.org/10.1016/C2014-0-04015-4, 2016.

Vance, T. C., Wengren, M., Burger, E., Hernandez, D., Kearns, T., Medina-Lopez, E., Merati, N., O'Brien, K., O'Neil, J., Potemra, J. T., Signell, R. P., and Wilcox, K.: From the Oceans to the Cloud: Opportunities and Challenges for Data, Models, Computation and Workflows, Front. Mar. Sci., 6, 211, https://doi.org/10.3389/fmars.2019.00211, 2019.

Veitch, J., Alvarez-Fanjul, E., Capet, A., Ciliberti, S., Cirano, M., Clementi, E., Davidson, F., el Sarafy, G., Franz, G., Hogan, P., Joseph, S., Liubartseva, S., Miyazawa, Y., Regan, H., and Spanoudaki, K.: A description of Ocean Forecasting Applications around the Globe, in: Ocean prediction: present status and state of the art (OPSR), edited by: Álvarez Fanjul, E., Ciliberti, S. A., Pearlman, J., Wilmer-Becker, K., and Behera, S., Copernicus Publications, State Planet, 5-opsr, 6, https://doi.org/10.5194/sp-5-opsr-6-2025, 2025.

Wilkinson, M. D., Dumontier, M., Aalbersberg, I. J., Appleton, G., Axton, M., Baak, A., Blomberg, N., Boiten, J.-W., Bonino da Silva Santos, L., Bourne, P. E., Bouwman, J., Brookes, A. J., Clark, T., Crosas, M., Dillo, I., Dumon, O., Edmunds, S., Evelo, C. T., Finkers, R., Gonzalez-Beltran, A., Gray, A. J. S., Groth, P., Goble, C., Grethe, J. S., Heringa, J., Hoen, P. A. C., Hooft, R., Kuhn, T., Kok, R., Kok, J., Lusher, S. J., Martone, M. E., Mons, A., Packer, A. L., Persson, B., Rocca-Serra, P., Roos, M., van Schaik, R., Sansone, S.-A., Schultes, E., Sengstag, T., Slater, T., Strawn, G., Swertz, M. A., Thompson, M., van der Lei, J., van Mulligen, E., Velterop, J., Waagmeester, A., Wittenburg, P., Wolstencroft, K., Zhao, J., and Mons, B.: The FAIR Guiding Principles for scientific data management and stewardship, Sci. Data, 3, 160018, https://doi.org/10.1038/sdata.2016.18, 2016.

Wong, A. P. S., Wijffels, S. E., Riser, S. C., Pouliquen, S., Hosoda, S., Roemmich, D., Gilson, J., Johnson, G. C., Martini, K., Murphy, D. J., Scanderbeg, M., Bhaskar, T. V. S. U., Buck, J. J. H., Merceur, F., Carval, T., Maze, G., Cabanes, C., André, X., Poffa, N., Yashayaev, I., Barker, P. M., Guinehut, S., Belbéoch, M., Ignaszewski, M., Baringer, M. O. N., Schmid, C., Lyman, J. M., McTaggart, K. E., Purkey, S. G., Zilberman, N., Alkire, M. B., Swift, D., Owens, W. B., Jayne, S. R., Hersh, C., Robbins, P., West-Mack, D., Bahr, F., Yoshida, S., Sutton, P. J. H., Cancouët, R., Coatanoan, C., Dobbler, D., Garcia Juan, A., Gourrion, J., Kolodziejczyk, N., Bernard, V., Bourlès, B., Claustre, H., D'Ortenzio, F., Le Reste, S., Le Traon, P.-Y., Rannou, J.-P., Saout-Grit, C., Speich, S., Thierry, V., Verbrugge, N., Angel-Benavides, I. M., Klein, B., Notarstefano, G., Poulain, P.-M., Vélez-Belchí, P., Suga, T., Ando, K., Iwasaka, N., Kobayashi, T., Masuda, S., Oka, E., Sato, K., Nakamura, T., Sato, K., Takatsuki, Y., Yoshida, T., Cowley, R., Lovell, J. L., Oke, P. R., van Wijk, E. M., Carse, F., Donnelly, M., Gould, W. J., Gowers, K., King, B. A., Loch, S. G., Mowat, M., Turton, J., Rama Rao, E. P., Ravichandran, M., Freeland, H. J., Gaboury, I., Gilbert, D., Greenan, B. J. W., Ouellet, M., Ross, T., Tran, A., Dong, M., Liu, Z., Xu, J., Kang, K. R., Jo, H. J., Kim, S. D., and Park, H. M.: Argo Data 1999–2019: Two Million Temperature-Salinity Profiles and Subsurface Velocity Observations From a Global Array of Profiling Floats, Front. Mar. Sci., 7, 700, https://doi.org/10.3389/fmars.2020.00700, 2020.

Zhao, Y., Li, Y., Raicu, I., Lu, S., Tian, W., and Liu, H.: Enabling scalable scientific workflow management in the Cloud, Future Gener. Comp. Sy., 46, 3–16, 2015.